❶ Introduction

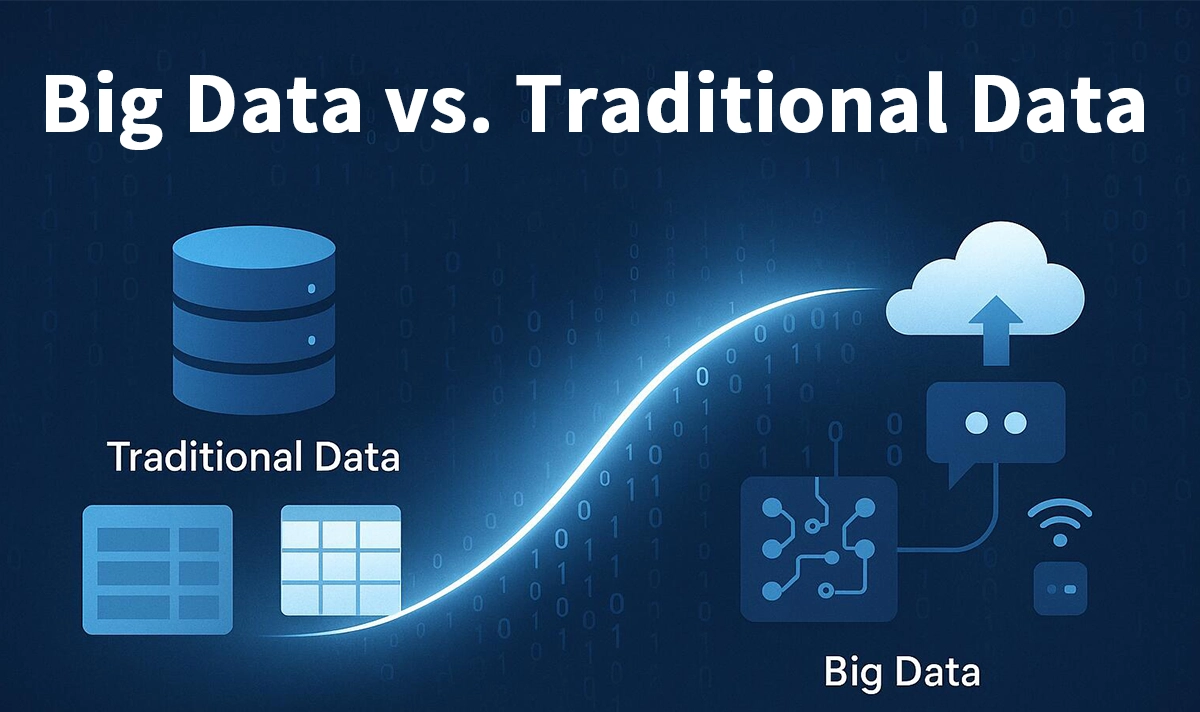

Data has always been the foundation of decision-making, but the way we collect, store, and analyze data has changed dramatically. Today, organizations distinguish between traditional data — structured, smaller-scale, and manageable within relational databases — and Big Data, which is vast, complex, and often unstructured.

Understanding the differences between these two categories is essential for businesses planning digital transformation, adopting AI, or scaling their analytics capabilities. In this article, we’ll break down the key differences between Big Data and Traditional Data, and explore how modern networking technologies, including optical transceivers from LINK-PP, help organizations manage the shift.

❷ What Is Traditional Data?

Traditional data refers to datasets that are:

Structured: Stored in relational databases with defined rows and columns.

Manageable Size: Typically measured in MBs or GBs, handled by single-server setups.

Static: Data updates are less frequent and usually batch-processed.

Low Velocity: Generated at predictable rates (e.g., sales records, customer profiles).

Traditional data works well for small to mid-sized businesses using ERP systems, CRMs, and financial applications.
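As a rough illustration of the structured, batch-oriented pattern described above, the sketch below loads a few sales records into an in-memory SQLite table and totals them per month with a SQL query. The table name `sales`, its columns, the function `monthly_sales_totals`, and the sample rows are all hypothetical and chosen only for this example.

```python
import sqlite3

def monthly_sales_totals(rows):
    """Load (order_id, month, amount) rows into a table and total them per month."""
    conn = sqlite3.connect(":memory:")  # single-process, in-memory database
    # Hypothetical schema: fixed columns with declared types (structured data).
    conn.execute(
        "CREATE TABLE sales ("
        "order_id INTEGER PRIMARY KEY, month TEXT NOT NULL, amount REAL NOT NULL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    # Batch-style aggregate over the whole table.
    totals = conn.execute(
        "SELECT month, SUM(amount) FROM sales GROUP BY month ORDER BY month"
    ).fetchall()
    conn.close()
    return totals

# Illustrative sample data, not taken from any real system.
sample_rows = [(1, "2024-01", 120.0), (2, "2024-01", 80.0), (3, "2024-02", 50.0)]
print(monthly_sales_totals(sample_rows))
```

Everything here fits on one machine and follows a fixed schema, which is the situation relational databases are built for.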

❸ What Is Big Data?

Big Data, on the other hand, is characterized by the 5Vs:

Volume: Massive amounts of data, often measured in TBs or PBs.

Velocity: Generated and processed in real-time or near real-time.

Variety: Includes structured, semi-structured, and unstructured data (e.g., IoT sensors, social media, images, videos).

Veracity: Data may be uncertain or inconsistent, requiring advanced processing.

Value: Insights extracted from Big Data drive business intelligence, AI, and predictive analytics.

Big Data environments rely on distributed storage (e.g., Hadoop's HDFS, cloud object storage) and high-speed networking to manage enormous datasets.
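The distributed pattern can be sketched in miniature: split the data into partitions, process each partition independently, then merge the partial results. In the example below, threads stand in for worker nodes, which is an assumption made purely for illustration. Frameworks such as Hadoop spread the same idea across many machines. The record fields and the names `count_events` and `distributed_count` are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def count_events(partition):
    """Map step: count event types within one partition.

    Records without a "type" field are counted as "unknown", since
    semi-structured data does not guarantee every field is present.
    """
    return Counter(record.get("type", "unknown") for record in partition)

def distributed_count(partitions, max_workers=4):
    """Run the map step on each partition concurrently, then merge the results."""
    total = Counter()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Threads are a stand-in for cluster nodes in this sketch.
        for partial in pool.map(count_events, partitions):
            total.update(partial)  # reduce step: merge partial counts
    return total

# Illustrative partitions with inconsistent record shapes (variety).
partitions = [
    [{"type": "click"}, {"type": "view"}, {"sensor_id": 7}],
    [{"type": "click"}, {"type": "click", "user": "a"}],
]
print(distributed_count(partitions))
```

Because each partition is counted independently, adding more partitions and workers scales the map step without changing the merge logic.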

❹ Big Data vs Traditional Data: Key Differences

| Feature | Traditional Data | Big Data |
|---|---|---|
| Data Type | Structured (tables, rows, columns) | Structured + Unstructured + Semi-structured |
| Size | MBs to GBs | TBs to PBs and beyond |
| Processing | Batch processing, SQL queries | Real-time, parallel, and distributed |
| Storage | Relational databases (RDBMS) | NoSQL, Hadoop, distributed file systems |
| Velocity | Slow, predictable | Fast, continuous, high-frequency streams |
| Use Cases | Financial records, ERP, CRM | AI and machine learning, IoT, real-time analytics |

❺ Why Infrastructure Matters

The transition from traditional data management to Big Data analytics cannot succeed without scalable infrastructure. This includes high-performance servers, distributed storage, and most importantly, high-bandwidth, low-latency connectivity.

Optical modules such as SFP, SFP+, and QSFP28 (100G) transceivers provide the link bandwidth that lets massive datasets move quickly and reliably between servers, storage systems, and cloud platforms.
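A back-of-the-envelope calculation shows why link speed matters at this scale. The sketch below estimates how long it takes to move a dataset over a single link, assuming nominal line rates of 1, 10, and 100 Gbit/s (commonly associated with SFP, SFP+, and QSFP28 modules respectively) and ignoring protocol overhead unless an `efficiency` factor is supplied. The function name `transfer_time_seconds` and its parameters are hypothetical.

```python
def transfer_time_seconds(size_bytes, link_gbps, efficiency=1.0):
    """Estimate the time to move size_bytes over one link.

    link_gbps  -- nominal line rate in gigabits per second (decimal, 1e9 bits/s)
    efficiency -- fraction of the line rate usable for payload (assumption;
                  1.0 means protocol overhead is ignored)
    """
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_bytes * 8
    return bits / (link_gbps * 1e9 * efficiency)

TERABYTE = 10**12  # decimal terabyte

# Nominal rates only; real throughput depends on the whole network path.
for label, gbps in [("1G", 1), ("10G", 10), ("100G", 100)]:
    seconds = transfer_time_seconds(TERABYTE, gbps)
    print(f"1 TB over {label}: {seconds:,.0f} s")
```

Under these assumptions, 1 TB takes about 8,000 seconds at 1 Gbit/s, 800 seconds at 10 Gbit/s, and 80 seconds at 100 Gbit/s. A petabyte multiplies each figure by 1,000.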

👉 Explore LINK-PP’s optical transceivers and SFP modules designed for data centers and Big Data workloads.

❻ Use Cases

AI and Machine Learning: Require Big Data pipelines supported by high-speed interconnects.

IoT Deployments: Billions of devices generate continuous data streams that must be aggregated and analyzed.

Real-Time Analytics: From fraud detection to personalized recommendations, latency-sensitive workloads depend on fiber-based networking.
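To make the real-time case concrete, here is a minimal sketch of a sliding-window check of the kind a fraud-detection pipeline might run on each incoming event. It flags a card once it exceeds a set number of transactions within a time window. The class name `VelocityCheck`, the thresholds, and the assumption that timestamps arrive in non-decreasing order are all illustrative. Production systems use far richer signals.

```python
from collections import defaultdict, deque

class VelocityCheck:
    """Flag a card that produces too many events within a sliding time window."""

    def __init__(self, window_seconds=60, max_events=3):
        self.window_seconds = window_seconds
        self.max_events = max_events
        self._recent = defaultdict(deque)  # card_id -> timestamps inside the window

    def observe(self, card_id, timestamp):
        """Record one event and return True if the card exceeds max_events.

        Assumes timestamps (in seconds) arrive in non-decreasing order per card.
        """
        events = self._recent[card_id]
        events.append(timestamp)
        # Drop events that have aged out of the window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.max_events

# Illustrative stream: four events within 60 s trigger the flag.
check = VelocityCheck(window_seconds=60, max_events=3)
for t in (0, 10, 20, 30, 100):
    print(t, check.observe("card-1", t))
```

Each event is evaluated as it arrives rather than in a nightly batch, which is why the time to deliver events to the analytics tier counts toward the end-to-end response time.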

❼ Conclusion

While Traditional Data continues to serve structured business processes, Big Data is essential for unlocking advanced analytics, AI, and IoT innovation. The key differences lie in scale, speed, and complexity — which in turn demand robust, future-ready infrastructure.

With its portfolio of high-performance optical transceivers, LINK-PP empowers organizations to seamlessly migrate from traditional data systems to Big Data environments, ensuring fast, reliable, and scalable connectivity.

👉 Learn more about LINK-PP’s optical module solutions here:

LINK-PP Optical Transceivers and SFP Modules

❽ FAQ

Q1: Is Big Data replacing Traditional Data?

A: Not exactly. Traditional data is still used for structured, transactional systems, while Big Data handles large, diverse, and real-time datasets. They often coexist in hybrid environments.

Q2: Why is Big Data important for businesses today?

A: Big Data enables real-time analytics, predictive insights, and personalized services, which are critical for competitiveness in digital markets.

Q3: What infrastructure do I need to support Big Data?

A: Organizations require distributed storage, high-performance servers, and optical networking modules for low-latency, high-bandwidth data transfers.

Q4: Can traditional databases handle Big Data?

A: Traditional relational databases struggle with the scale and complexity of Big Data. Modern platforms like Hadoop, Spark, and cloud-native databases are designed for these workloads.

Q5: How do LINK-PP optical modules support Big Data?

A: LINK-PP optical transceivers provide high-speed, reliable connections between servers, storage, and cloud systems, ensuring Big Data can be processed efficiently.