💡 In a nutshell: High Performance Computing (HPC) is the engine powering humanity's most ambitious scientific breakthroughs, complex simulations, and data-driven innovations. It's not just faster computing; it's computing at a scale that tackles problems impossible for standard machines. Think simulating galaxy formation, designing life-saving drugs in record time, or training the next generation of AI models. This guide dives deep into what HPC truly is, how it works, its transformative applications, and why it's the cornerstone of modern discovery.

Feeling overwhelmed by massive datasets or complex simulations grinding your standard systems to a halt? You're not alone. The relentless growth of data and computational demands has pushed traditional computing to its limits. High Performance Computing (HPC) emerges as the critical solution, transforming impossible tasks into achievable goals. But what is HPC, exactly? Let's demystify this powerhouse technology.

📝 Beyond the Desktop: Defining High Performance Computing (HPC)

At its core, HPC is the aggregation of computing power to solve complex problems requiring immense calculations or data processing at speeds far exceeding the capabilities of a typical desktop computer, workstation, or even a single powerful server. It's the realm of supercomputers and high performance computing clusters.

Scale: HPC systems combine hundreds, thousands, or even millions of processing cores (CPUs, GPUs, specialized accelerators).

Parallelism: The key to HPC's speed is parallel processing. Instead of tackling a problem sequentially (one step after another), HPC breaks it down into smaller tasks that can be computed simultaneously across many cores.

Speed: Measured in FLOPS (Floating Point Operations Per Second) – think billions (GigaFLOPS), trillions (TeraFLOPS), quadrillions (PetaFLOPS), and now quintillions (ExaFLOPS).

Specialized Infrastructure: HPC demands high-speed, low-latency interconnects (like InfiniBand or high-speed Ethernet), massive parallel file systems for handling vast datasets, sophisticated cooling solutions, and specialized software for managing workloads and parallel execution.

📝 The Engine Room: Key Components of an HPC System (HPC Architecture)

Understanding what is HPC requires peering under the hood. An HPC system, often referred to as a cluster, is a symphony of specialized components working in concert:

Compute Nodes: The workhorses. Each node is essentially a server containing multiple CPUs (Central Processing Units) and increasingly, GPUs (Graphics Processing Units) or other accelerators (like TPUs or FPGAs). GPUs excel at massively parallel calculations common in AI/ML, simulations, and graphics. Density is key – packing maximum compute power into minimal space.

High-Speed Interconnect: The nervous system. This ultra-fast network (InfiniBand, Omni-Path, or high-end Ethernet like 200GbE/400GbE) allows nodes to communicate and share data with extremely low latency (delay) and high bandwidth (data transfer rate). Network performance is often the single biggest factor determining overall cluster efficiency. This is where high-performance optical transceivers become critical. For example, LINK-PP's 200G QSFP56 optical modules (like the QSFP56-200G-SR4 for short reach or QSFP56-200G-DR4 for medium reach) provide the essential, reliable, high-bandwidth connectivity needed between top-of-rack switches and core routers in demanding HPC fabrics, minimizing bottlenecks.

Parallel Storage (File System): The vast memory. HPC deals with petabytes of data. Parallel file systems (like Lustre, IBM Spectrum Scale (GPFS), or BeeGFS) distribute data across many storage devices (HDDs, SSDs, NVMe), allowing multiple compute nodes to read and write simultaneously at incredible speeds. This is crucial for data-intensive computing.

Cluster Management Software: The conductor. Software stacks (e.g., Bright Cluster Manager, OpenHPC, SLURM, PBS Pro) handle resource scheduling (deciding which jobs run where and when), system monitoring, user management, and software environment provisioning.

Cooling & Power: The life support. HPC clusters generate immense heat and consume significant power. Advanced cooling solutions (liquid cooling, advanced air cooling) and robust, redundant power delivery are non-negotiable for stability and efficiency.

Table 1: Traditional Servers vs. HPC Compute Nodes - Key Differences

Feature | Traditional Enterprise Server | HPC Compute Node |

|---|---|---|

Primary Focus | General-purpose workloads, reliability, uptime | Raw computational speed, parallel processing |

Processing Power | Moderate CPU cores, often minimal GPU | High core count CPUs, Many powerful GPUs/Accelerators |

Memory (RAM) | Sufficient for business applications | Very High Capacity & Bandwidth (HBM common) |

Interconnect | Standard Gigabit/10Gb Ethernet | Ultra-High Speed, Low Latency (InfiniBand, 200/400GbE) |

Storage Access | Direct-attached or SAN/NAS | Massive Parallel File System Access |

Cooling | Standard air cooling | Often Advanced Air or Liquid Cooling |

Density | Moderate | Very High (maximizing compute per rack unit) |

📝 Why Do We Need HPC? Solving the Unsolvable (HPC Applications)

What is HPC enabling? Its applications are revolutionizing nearly every field:

Scientific Research (Scientific Computing):

Climate Modeling: Simulating complex climate systems decades into the future to understand climate change impacts.

Astrophysics: Simulating galaxy formation, neutron star collisions, and black hole dynamics.

Molecular Dynamics/Drug Discovery: Simulating interactions between molecules to design new drugs and materials, drastically reducing lab time and cost.

Genomics & Bioinformatics: Analyzing massive DNA datasets for personalized medicine, understanding diseases, and tracing evolution.

Engineering & Product Design (CAE):

Computational Fluid Dynamics (CFD): Simulating airflow over aircraft wings, combustion in engines, or weather patterns.

Finite Element Analysis (FEA): Simulating stress, vibration, heat transfer, and crashworthiness in everything from buildings to cars to microchips.

Electronics Design Automation (EDA): Designing and verifying complex semiconductor chips.

Artificial Intelligence & Machine Learning (AI/ML Workloads):

Training Large Models: HPC clusters, especially those packed with GPUs, are essential for training the massive deep learning models behind breakthroughs in natural language processing (ChatGPT, etc.), computer vision, and recommendation systems.

Large-Scale Inference: Running trained models on vast datasets for real-time or near-real-time insights.

Data Analytics & Big Data (Data-Intensive Computing):

Financial Modeling: Running complex risk simulations and high-frequency trading algorithms.

Energy Exploration: Processing seismic data to locate oil and gas reserves.

Logistics & Supply Chain: Optimizing massive, complex global networks.

Government & Defense:

Cryptography: Breaking and designing complex codes.

Nuclear Simulation: Maintaining nuclear stockpiles without physical testing.

Intelligence Analysis: Processing vast amounts of surveillance and signal data.

📝 HPC vs. Cloud Computing vs. Supercomputing: Clearing the Confusion

HPC: Refers to the approach and technology of aggregated computing power for solving large problems, primarily using parallelism. It can be deployed on-premises, in private clouds, or accessed via public cloud HPC services (like AWS ParallelCluster, Azure CycleCloud, Google Cloud HPC Toolkit).

Supercomputing: Typically refers to the largest, most powerful, and often unique HPC systems in the world, frequently found in national labs and research institutions. They push the absolute boundaries of computing power (operating at the PetaFLOPS and ExaFLOPS scale). Think Summit, Fugaku, or Frontier. All supercomputers are HPC systems, but not all HPC clusters are supercomputers.

Cloud Computing: A delivery model for computing resources (servers, storage, networking, software) over the internet, typically on-demand and pay-as-you-go. Cloud platforms now offer robust HPC services, making high-performance resources more accessible without massive upfront investment in physical infrastructure.

📝 The Building Blocks of Speed: Processors, Interconnects, and Optical Modules

Achieving HPC's incredible performance relies heavily on cutting-edge hardware:

CPUs: Remain vital for general-purpose tasks and managing workflows. High core count (64, 96, 128+ cores) and support for wide vector instructions (like AVX-512) are key. AMD EPYC and Intel Xeon Scalable dominate this space.

GPUs/Accelerators: Have become indispensable for parallel workloads. NVIDIA GPUs (A100, H100) are currently dominant in HPC/AI, but alternatives like AMD Instinct MI series and specialized AI chips (Cerebras, Graphcore, SambaNova) are gaining ground. They deliver orders of magnitude more FLOPS than CPUs for suitable tasks.

Interconnects: As mentioned, low latency and high bandwidth are paramount. InfiniBand (HDR, NDR) has traditionally led in performance, but Ethernet (200GbE, 400GbE, soon 800GbE) is catching up rapidly with technologies like RDMA over Converged Ethernet (RoCE) reducing latency. The choice significantly impacts application performance, especially for tightly coupled simulations.

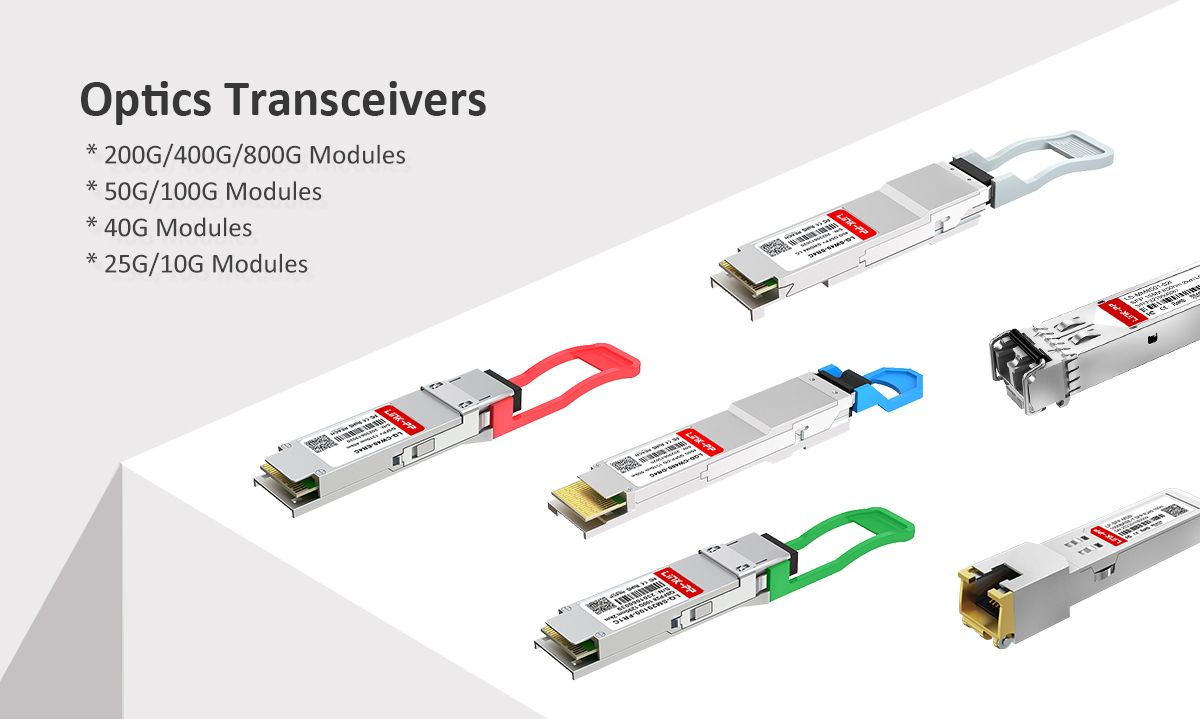

The Role of Optical Modules: These small but crucial components (optical transceivers) convert electrical signals from switches and adapters into optical signals for transmission over fiber optic cables. They are the workhorses of the high-speed interconnect. Demanding HPC environments require the latest generation, highly reliable modules:

Speed: 200G (QSFP56), 400G (QSFP-DD, OSFP), 800G.

Reach: SR (Short Reach), DR (500m), FR (2km), LR (10km) depending on cluster size.

Reliability & Low Power: Essential for dense deployments and minimizing operational costs.

Brands like LINK-PP provide critical optical connectivity solutions that ensure the backbone of the HPC network performs flawlessly under heavy load. Key models for modern HPC include:

LINK-PP QSFP56-200G-SR4: Ideal for intra-rack or short top-of-rack connections.

LINK-PP QSFP-DD-400G-LR4/DR4/FR4: For next-generation 400G fabrics.

Table 2: Common HPC Interconnect Technologies & Optical Module Types

Interconnect Standard | Speed Per Port | Common Form Factors | Typical Optical Modules (Examples) | Key Use Case in HPC |

|---|---|---|---|---|

InfiniBand HDR | 200Gbps | QSFP56 | HDR 200G SR4, HDR 200G DR4 | High-performance tightly coupled clusters |

InfiniBand NDR | 400Gbps | QSFP-DD, OSFP | NDR 400G FR4, NDR 400G LR4 | Next-gen Exascale systems |

Ethernet 200GbE | 200Gbps | QSFP56 | 200G-SR4, 200G-DR4, 200G-FR4 | General HPC, AI/ML, Cloud HPC |

Ethernet 400GbE | 400Gbps | QSFP-DD, OSFP | 400G-SR8, 400G-DR4, 400G-FR4 | Next-gen HPC, Large-scale AI training |

Ethernet 800GbE | 800Gbps | QSFP-DD800, OSFP | 800G-SR8, 800G-DR8 (Emerging) | Future Exascale+ systems |

📝 The Future of HPC: Exascale and Beyond (HPC Trends)

We've entered the Exascale era, where systems can perform a quintillion (10^18) calculations per second (ExaFLOPS). Projects like the US's Frontier and Aurora, Europe's LUMI and Leonardo, and Japan's Fugaku are leading this charge. But what's next?

Zettaflop Ambitions (10^21 FLOPS): Research is already looking beyond exascale.

Convergence of HPC, AI, and Big Data (HPDA): Boundaries are blurring. HPC techniques accelerate AI, AI enhances HPC simulations, and both require massive data handling.

Quantum Computing Integration: Exploring hybrid models where quantum processors handle specific sub-tasks within larger classical HPC workflows.

Advanced AI Accelerators: Continued specialization of hardware for AI workloads integrated into HPC systems.

Sustainability: Power consumption is a massive challenge. Future HPC demands revolutionary improvements in energy efficiency (FLOPS per Watt) through better chips, advanced cooling (immersion cooling), and smarter software. (Keywords: exascale computing, HPC trends, future of HPC, green HPC)

Democratization via Cloud: Cloud HPC will continue to make these powerful resources accessible to smaller companies and research groups.

🔵 Ready to harness the power of HPC for your most demanding challenges? LINK-PP provides cutting-edge optical connectivity solutions essential for building high-performance, reliable HPC infrastructure. [Discover LINK-PP's HPC Optical Modules ➞]

📝 Conclusion: HPC - The Indispensable Engine of Progress

So, what is HPC? It's far more than just fast computers. High Performance Computing is the fundamental infrastructure enabling us to push the boundaries of human knowledge and technological capability. From unlocking the secrets of the universe and developing life-saving medicines to designing revolutionary products and training transformative AI, HPC is the indispensable engine driving progress in the 21st century. As we venture into the exascale era and beyond, fueled by advancements in processors, accelerators, and crucially, high-speed interconnects and optical modules from providers like LINK-PP, the potential of HPC to solve even greater challenges and unlock new possibilities is truly limitless.