★ Introduction: Why Optical Modules Matter in GPU Clusters

GPU clusters are the backbone of modern high-performance computing (HPC) and AI workloads. They consist of multiple GPU nodes working in parallel to process massive datasets. Efficient node-to-node communication is crucial, as data must flow seamlessly between GPUs to maximize computational performance.

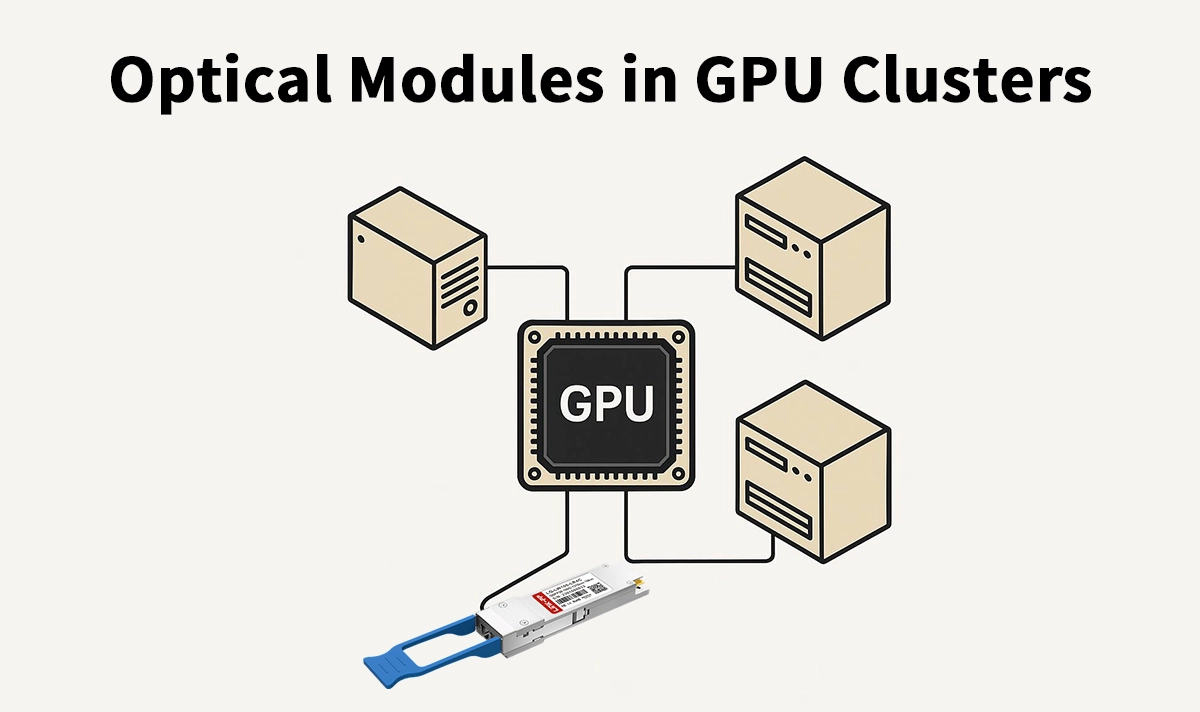

Optical modules, such as SFP and QSFP transceivers and CWDM modules, are the core components that make this high-speed, high-bandwidth, long-distance connectivity possible. Without them, even the most powerful GPU clusters would be bottlenecked by network limitations.

★ How Optical Modules Enable GPU Cluster Performance

1. High-Speed Data Transmission

GPU clusters generate enormous amounts of data during computation and AI model training. Optical modules are available at line rates of 10G, 25G, 40G, 100G, and higher, so data moves quickly between nodes while the modules themselves add little latency.
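To put these line rates in perspective, the short Python sketch below computes the idealized time to move a payload across one link. It assumes the payload gets the full nominal line rate. Real links lose some capacity to encoding, framing, and protocol overhead. The 10 GB payload is a hypothetical figure chosen for illustration.

```python
# Idealized transfer time over a single optical link.
# Assumption: the payload gets the full nominal line rate. Real links lose
# some capacity to line encoding (e.g. 64b/66b), framing, and protocol
# overhead, so measured transfers take somewhat longer.


def transfer_time_s(payload_bytes: int, line_rate_gbps: float) -> float:
    """Return the idealized time in seconds to send payload_bytes."""
    bits = payload_bytes * 8
    return bits / (line_rate_gbps * 1e9)


if __name__ == "__main__":
    payload = 10 * 10**9  # hypothetical 10 GB exchanged between two nodes
    for rate in (10, 25, 40, 100):
        print(f"{rate:>3}G: {transfer_time_s(payload, rate):5.2f} s")
```

Under these assumptions, the same 10 GB takes about 8 s at 10G but only about 0.8 s at 100G. That difference is why faster modules shorten the communication phases of cluster workloads.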

2. Long-Distance Communication

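In large data centers or distributed HPC environments, GPU nodes may be separated by several kilometers. Optical modules running over single-mode fiber can span these distances with low signal loss. CWDM (Coarse Wavelength Division Multiplexing) modules add efficiency by carrying several wavelengths on one fiber, so a single fiber pair can serve multiple channels. Together, these capabilities support both inter-rack and inter-data-center links.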

3. High Bandwidth for Parallel Processing

Large-scale GPU computations demand substantial network bandwidth. QSFP modules, common in HPC clusters, combine four parallel lanes into a single port (for example, 4 × 10G for 40G or 4 × 25G for 100G). This gives each link 40G or more, so many GPUs can exchange data at the same time with less congestion.
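The aggregation works by splitting one port into parallel lanes. The sketch below tabulates commonly quoted nominal lane counts and per-lane rates for several QSFP generations and multiplies them out. The table is illustrative and is not a vendor specification.

```python
# Aggregate port bandwidth = number of lanes x per-lane rate.
# Nominal values as commonly quoted for each generation; actual signaling
# rates and modulation (NRZ vs PAM4) differ by standard.

QSFP_VARIANTS = {
    # name: (lanes, nominal Gb/s per lane)
    "QSFP+": (4, 10),
    "QSFP28": (4, 25),
    "QSFP56": (4, 50),
    "QSFP-DD": (8, 50),  # 400G QSFP-DD: double density, eight lanes
}


def aggregate_gbps(variant: str) -> int:
    """Return the nominal aggregate bandwidth of a QSFP variant in Gb/s."""
    lanes, per_lane = QSFP_VARIANTS[variant]
    return lanes * per_lane


if __name__ == "__main__":
    for name, (lanes, per_lane) in QSFP_VARIANTS.items():
        print(f"{name:<8} {lanes} x {per_lane}G = {aggregate_gbps(name)}G")
```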

4. Reliability and Low Latency

Optical modules are engineered for low bit error rates and stable signal transmission. In GPU clusters, where even microseconds matter for AI training, inference, and HPC simulations, these modules help keep performance consistent and predictable.
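Distance also sets a floor on latency, independent of the module, because light in silica fiber travels at roughly c / n. This sketch assumes a group index of about 1.47, a typical value for single-mode fiber. It estimates one-way propagation delay for a few hypothetical link lengths and ignores module, switch, and serialization delays.

```python
# One-way propagation delay over optical fiber.
# Assumption: a group index of ~1.47, a typical value for silica single-mode
# fiber; the exact value depends on the fiber and wavelength.
# Module, switch, and serialization delays are not included.

SPEED_OF_LIGHT_M_PER_S = 299_792_458  # speed of light in vacuum
GROUP_INDEX = 1.47  # assumed typical group index for silica fiber


def propagation_delay_us(distance_m: float, group_index: float = GROUP_INDEX) -> float:
    """Return the one-way propagation delay in microseconds."""
    seconds = distance_m * group_index / SPEED_OF_LIGHT_M_PER_S
    return seconds * 1e6


if __name__ == "__main__":
    # Hypothetical link lengths: in-rack, across a hall, campus, metro.
    for label, meters in (("3 m", 3), ("100 m", 100), ("2 km", 2_000), ("10 km", 10_000)):
        print(f"{label:>6}: {propagation_delay_us(meters):8.3f} us")
```

With this assumption, each kilometer adds roughly 4.9 µs one way. A 10 km inter-data-center link therefore adds about 49 µs, while a 3 m in-rack link adds only about 15 ns.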

★ Common Types of Optical Modules for GPU Clusters

SFP (Small Form-factor Pluggable): Compact and widely used, with the family covering 1G (SFP), 10G (SFP+), and 25G (SFP28) interconnects. Ideal for smaller GPU clusters or edge nodes.

QSFP (Quad Small Form-factor Pluggable): Supports 40G–400G, commonly deployed in large-scale HPC and AI clusters for high-bandwidth interconnects.

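CWDM (Coarse Wavelength Division Multiplexing): A wavelength scheme rather than a form factor, offered in both SFP and QSFP modules. CWDM combines several wavelength channels, spaced 20 nm apart, onto a single fiber, which extends reach and makes efficient use of fiber in distributed GPU data centers.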
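CWDM channels sit on a fixed wavelength grid. The sketch below generates the nominal 18-channel grid defined in ITU-T G.694.2, which runs from 1271 nm to 1611 nm in 20 nm steps. It then picks out the four wavelengths used by 100G CWDM4 modules, which carry one 25G lane per wavelength over a single fiber in each direction.

```python
# Nominal CWDM wavelength grid from ITU-T G.694.2:
# 18 channels, 20 nm spacing, from 1271 nm to 1611 nm.

FIRST_CHANNEL_NM = 1271
SPACING_NM = 20
CHANNEL_COUNT = 18


def cwdm_grid() -> list[int]:
    """Return the nominal CWDM center wavelengths in nanometers."""
    return [FIRST_CHANNEL_NM + SPACING_NM * i for i in range(CHANNEL_COUNT)]


if __name__ == "__main__":
    grid = cwdm_grid()
    print(f"{len(grid)} channels: {grid[0]} nm to {grid[-1]} nm")
    # 100G CWDM4 uses the four lowest channels, one 25G lane per wavelength.
    print("CWDM4 wavelengths (nm):", grid[:4])
```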

★ LINK-PP Optical Modules for GPU Cluster Networking

LINK-PP offers a comprehensive range of optical modules optimized for high-performance, low-latency, and high-bandwidth GPU cluster networks:

SFP and QSFP modules for flexible cluster interconnects.

CWDM modules for long-distance GPU node communication.

Fully compatible with mainstream networking equipment in data centers.

These products help engineers and IT managers maximize GPU cluster efficiency and reliability.

★ Conclusion

In GPU clusters, where AI training and HPC workloads demand extreme performance, optical modules are indispensable. By enabling high-speed, high-bandwidth, and long-distance connectivity, SFP, QSFP, and CWDM modules allow GPU nodes to communicate efficiently, ensuring that computational power is fully utilized.

Explore LINK-PP’s optical modules to build high-performance GPU cluster networks with reliable, high-speed connectivity.