Modern data centers are the unsung heroes of our connected world, powering everything from cloud computing and AI to streaming services and financial transactions. At the core of this digital ecosystem are data center transceivers—the critical components that convert and transmit data as pulses of light across fiber optic cables.

But not all transceivers are created equal. Two fundamental metrics dictate their performance and, by extension, the health of the entire data center: Signal Integrity (SI) and Low Latency. In this article, we'll explore why these factors are paramount and how they impact everything from user experience to operational costs.

➤ Key Takeaways

Signal integrity determines whether data signals arrive clean and strong enough to be decoded correctly. Good signal integrity prevents bit errors and keeps your network running reliably.

Low latency is essential for real-time applications. It keeps interactions responsive, which improves video calls, online gaming, and financial trading.

Advanced transceivers can reduce delay. Choose modules designed for low latency and high data rates.

Maintain cables and connections regularly. Inspect and clean connectors and fiber end faces to preserve signal integrity.

Energy-efficient transceivers draw less power, which reduces the heat load in your data center and lowers energy costs.

➤ Understanding Signal Integrity: The Clarity of the Conversation

Signal Integrity (SI) refers to the quality and fidelity of an electrical or optical signal as it travels from a transmitter to a receiver. Think of it as having a crystal-clear phone call versus one filled with static and dropouts.

In the context of high-speed data center interconnects (DCI), a signal with poor integrity is distorted, leading to data errors. Key enemies of SI include:

Attenuation: Loss of signal strength over distance.

Jitter: Deviations of signal transitions from their ideal timing, which can cause bits to be sampled at the wrong moment.

Crosstalk: Unwanted interference from adjacent channels or cables.

Reflections: Signals bouncing back at impedance mismatches on electrical paths, or at connector and splice interfaces on optical paths.

When SI is compromised, the system's Bit Error Rate (BER) increases. Errors that forward error correction (FEC) cannot fix force the network to retransmit corrupted data packets, consuming valuable bandwidth, increasing power consumption, and ultimately slowing down the entire operation. For applications like real-time analytics or high-frequency trading, this is unacceptable.
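
To make BER concrete, the sketch below turns a BER and a line rate into an expected number of bit errors per second, and estimates the chance that a packet contains at least one bit error. It assumes bit errors are independent (real links often see bursty errors, and FEC changes the picture); the 400 Gb/s rate and the BER values are illustrative inputs, not measurements.

```python
import math

def errors_per_second(line_rate_bps: float, ber: float) -> float:
    """Expected number of bit errors per second at a given line rate and BER."""
    return line_rate_bps * ber

def packet_error_probability(packet_bytes: int, ber: float) -> float:
    """Probability that a packet has at least one bit error.

    Assumes independent bit errors: P = 1 - (1 - BER)^bits.
    log1p/expm1 keep the result accurate when BER is tiny.
    """
    bits = packet_bytes * 8
    return -math.expm1(bits * math.log1p(-ber))

if __name__ == "__main__":
    rate = 400e9  # illustrative 400 Gb/s link
    for ber in (1e-12, 1e-9, 1e-6):
        print(f"BER={ber:.0e}: {errors_per_second(rate, ber):.3g} errors/s, "
              f"P(1500-byte packet corrupted)={packet_error_probability(1500, ber):.3g}")
```

At 400 Gb/s, even a BER of 1e-12 works out to about 0.4 errors per second, or roughly one error every 2.5 seconds, which is why high-speed links rely on FEC rather than on a perfectly clean channel.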

➤ The Critical Role of Low Latency: The Need for Speed

Latency is the time delay between the moment a data packet is sent and the moment it is received. Achieving low latency means keeping this delay to a minimum.

Why does this matter so much? Let's look at a comparison of latency-sensitive applications:

| Application | Latency Requirement | Consequence of High Latency |
|---|---|---|
| High-Frequency Trading | Microseconds (µs) | Millions in lost arbitrage opportunities. |
| Online Gaming & eSports | Milliseconds (ms) | "Lag" causing a poor user experience and competitive disadvantage. |
| AI/ML Model Training | Nanoseconds (ns) per hop | Drastically increased total training time for complex models. |
| Virtual/Augmented Reality | < 20 ms | Motion sickness and a broken sense of immersion. |
| Real-Time Database Replication | Milliseconds (ms) | Data inconsistency and potential service outages. |

Achieving ultra-low latency isn't just about raw speed; it's about designing every component in the data path—especially the transceivers—for minimal processing delay.
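
A per-link latency budget can be sketched by adding fiber propagation, serialization, and per-module processing delay. This is a minimal sketch under stated assumptions: light in standard single-mode fiber travels at roughly c/1.468 (about 4.9 µs per km), and the `module_delay_ns` value in the example is a hypothetical placeholder, not a measured or datasheet figure.

```python
C_VACUUM_M_PER_S = 299_792_458.0
FIBER_GROUP_INDEX = 1.468  # assumed typical value for standard single-mode fiber

def propagation_delay_ns(length_m: float, n: float = FIBER_GROUP_INDEX) -> float:
    """Time for light to traverse length_m of fiber, in nanoseconds."""
    return length_m * n / C_VACUUM_M_PER_S * 1e9

def serialization_delay_ns(frame_bytes: int, line_rate_bps: float) -> float:
    """Time to clock a whole frame onto the link, in nanoseconds."""
    return frame_bytes * 8 / line_rate_bps * 1e9

def link_latency_ns(length_m: float, frame_bytes: int, line_rate_bps: float,
                    module_delay_ns: float) -> float:
    """One-way latency of one link: fiber + serialization + a transmitting and a receiving module."""
    return (propagation_delay_ns(length_m)
            + serialization_delay_ns(frame_bytes, line_rate_bps)
            + 2 * module_delay_ns)

if __name__ == "__main__":
    # Hypothetical inputs: 2 km of fiber, 1500-byte frame, 400 Gb/s, 100 ns per module.
    total = link_latency_ns(2000, 1500, 400e9, module_delay_ns=100.0)
    print(f"{total:.1f} ns")
```

With these placeholder inputs, roughly 9.8 µs of fiber propagation dwarfs the 30 ns of serialization, so per-module delay matters most on short intra-rack links and on multi-hop paths where it accumulates.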

➤ The Convergence: Why SI and Low Latency are Inseparable in Transceivers

In data center transceivers, signal integrity and low latency are two sides of the same coin. You cannot reliably have one without the other.

Poor SI Increases Effective Latency: When a signal is degraded and errors occur, the system must detect the error and request a retransmission. This entire process—detection, request, and resend—adds significant latency. A transceiver with excellent SI minimizes these retransmissions, ensuring data gets through correctly the first time.

High-Speed Demands Flawless SI: As data rates escalate from 100G to 400G, 800G, and beyond, the tolerances for signal distortion become incredibly tight. The electrical and optical signal integrity of a transceiver determines the maximum achievable data rate at a given BER. A robust transceiver design is what enables reliable 400G data center deployment without sacrificing performance.
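
The retransmission penalty described above can be estimated with a simple model, assuming each delivery attempt fails independently with probability `p_fail` and every failed attempt costs a fixed penalty (detection, request, and resend). The number of attempts is then geometrically distributed with mean 1/(1 - p). The function and parameter names are illustrative, not taken from any specific protocol.

```python
def expected_attempts(p_fail: float) -> float:
    """Mean number of attempts until success when each attempt fails with probability p_fail."""
    if not 0.0 <= p_fail < 1.0:
        raise ValueError("p_fail must be in [0, 1)")
    return 1.0 / (1.0 - p_fail)

def effective_latency_us(base_latency_us: float, p_fail: float,
                         retransmit_penalty_us: float) -> float:
    """Average delivery latency including retransmissions.

    Every attempt after the first adds retransmit_penalty_us.
    """
    extra_attempts = expected_attempts(p_fail) - 1.0
    return base_latency_us + extra_attempts * retransmit_penalty_us

if __name__ == "__main__":
    # Hypothetical: 10 us base latency, 200 us penalty per retransmission.
    for p in (0.0, 0.001, 0.01, 0.1):
        print(f"p_fail={p}: {effective_latency_us(10.0, p, 200.0):.2f} us")
```

Because the penalty for a retransmission is usually far larger than the base latency, even a small failure probability can noticeably raise the average, which is the sense in which poor SI increases effective latency.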
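
One reason tolerances tighten at 400G and beyond is the move from two-level NRZ to four-level PAM4 signaling. The sketch below computes the symbol rate for a given lane bit rate and the ideal eye-amplitude penalty of PAM4 relative to NRZ at the same peak-to-peak swing, ignoring noise and other impairments. The 106.25 Gb/s lane rate is the per-wavelength rate used by 100G-per-lane PAM4 optics such as 400GBASE-FR4, including FEC overhead.

```python
import math

def symbol_rate_baud(bit_rate_bps: float, levels: int) -> float:
    """Symbols per second needed to carry bit_rate_bps with an M-level PAM signal."""
    return bit_rate_bps / math.log2(levels)

def eye_penalty_db(levels: int) -> float:
    """Ideal eye-amplitude penalty vs. NRZ at equal peak-to-peak swing.

    An M-level signal splits the swing into (M - 1) stacked eyes, so each eye
    is 1/(M - 1) as tall; the ratio is expressed in dB (amplitude, 20*log10).
    """
    return 20 * math.log10(levels - 1)

if __name__ == "__main__":
    lane = 106.25e9  # 100G-per-lane PAM4 line rate, including FEC overhead
    print(f"PAM4 symbol rate: {symbol_rate_baud(lane, 4) / 1e9:.3f} GBd")
    print(f"NRZ symbol rate:  {symbol_rate_baud(lane, 2) / 1e9:.3f} GBd")
    print(f"PAM4 eye penalty: {eye_penalty_db(4):.2f} dB")
```

PAM4 halves the symbol rate, but each eye is only a third as tall (about 9.5 dB less amplitude), so the same noise, jitter, and crosstalk consume far more of the available margin.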

This is where the engineering excellence of a manufacturer becomes critical. Companies like LINK-PP focus on designing transceivers where the internal components, laser drivers, and DSP (Digital Signal Processing) chips are optimized to work in harmony, preserving signal clarity and minimizing every nanosecond of delay.

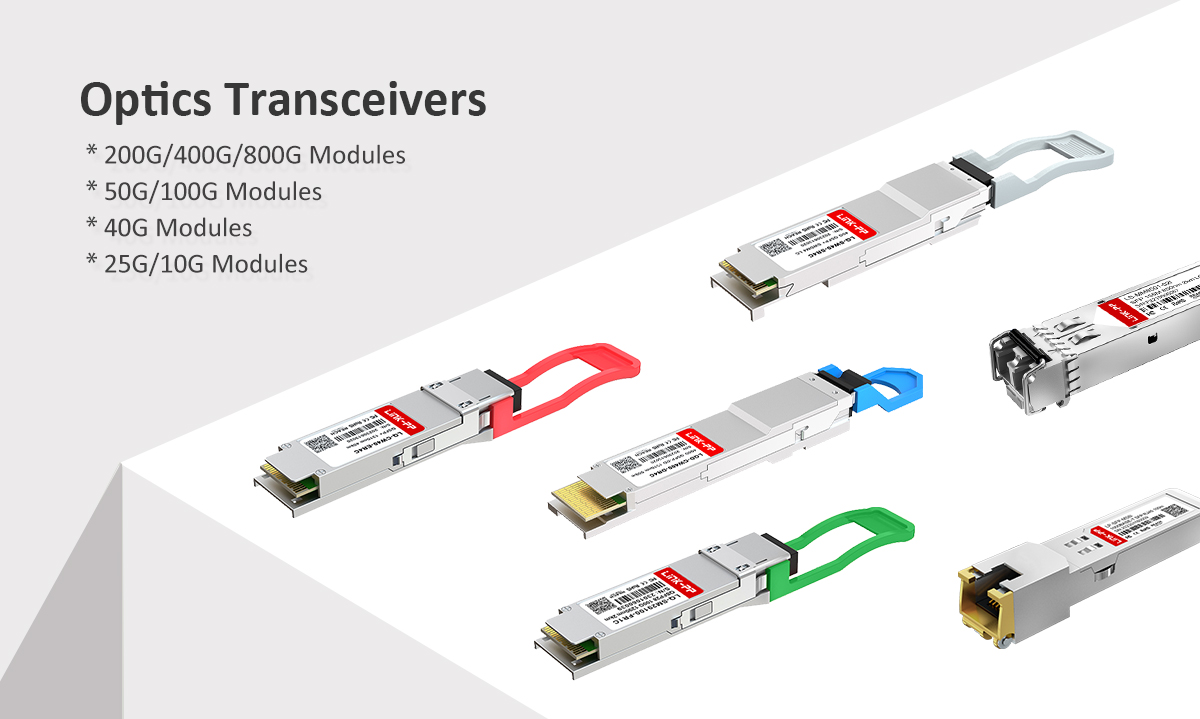

➤ The Role of Optical Transceiver Modules

The Engine of Data Transmission

Optical transceiver modules are the workhorses that implement the conversion between electrical signals (from switches/servers) and optical signals (for fiber transmission). They are a primary battleground in the fight for superior SI and low latency.

A high-quality optical module ensures:

Clean Signal Generation: Precise lasers and modulators produce a stable optical signal with minimal jitter and noise.

Efficient Reception: High-sensitivity photodiodes accurately convert faint light signals back into clean electrical data.

Minimal Power Consumption: Advanced designs run cooler and use less power, which is a key consideration for data center power efficiency and total cost of ownership.
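
Power efficiency is often compared as energy per bit. A minimal sketch: divide module power by the data rate to get picojoules per bit, and scale up to a fleet to estimate annual energy. The 12 W power draw and the 1,000-module count are hypothetical inputs for illustration, not LINK-PP specifications, and the estimate excludes cooling overhead.

```python
def picojoules_per_bit(power_w: float, data_rate_bps: float) -> float:
    """Energy per transmitted bit, in pJ/bit."""
    return power_w / data_rate_bps * 1e12

def annual_energy_kwh(power_w: float, modules: int, hours: float = 24 * 365) -> float:
    """Energy drawn by `modules` transceivers running continuously for `hours`, in kWh."""
    return power_w * modules * hours / 1000.0

if __name__ == "__main__":
    p = 12.0  # hypothetical module power draw, watts
    print(f"{picojoules_per_bit(p, 400e9):.1f} pJ/bit")
    print(f"{annual_energy_kwh(p, modules=1000):,.0f} kWh/year for 1,000 modules")
```

Every watt saved per module is multiplied across thousands of ports and again by the cooling needed to remove the heat, which is why small per-module differences show up in total cost of ownership.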

Case in Point: The LINK-PP 400G-FR4 Transceiver

When discussing modules that excel in both signal integrity and low latency, the LINK-PP 400G-FR4 is a prime example. This QSFP-DD form factor transceiver is engineered for high-performance data centers.

Here’s how it addresses our core themes:

Superior Signal Integrity: It incorporates a sophisticated DSP that actively compensates for signal impairments like chromatic dispersion, ensuring a clear and reliable link over standard single-mode fiber up to 2 km.

Ultra-Low Latency: The LINK-PP 400G-FR4 is designed with a cut-through architecture, minimizing processing delays. This makes it an ideal solution for low latency cloud computing and high-performance computing (HPC) clusters.

Interoperability & Reliability: Built to meet rigorous MSA (Multi-Source Agreement) standards, it ensures seamless compatibility with major networking hardware, providing peace of mind for network architects.
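
Dispersion and limited component bandwidth show up at the receiver as inter-symbol interference (ISI), which DSPs counter with equalizers. The sketch below is a generic, fixed-tap feed-forward equalizer (FFE), not LINK-PP's DSP design: it assumes a toy channel where each PAM4 symbol leaks half its amplitude into the next, and shows that a short FIR filter approximating the channel inverse lets a nearest-level slicer recover the transmitted symbols. Real DSPs adapt their taps continuously; the fixed taps here are an assumption for clarity.

```python
PAM4_LEVELS = (-3.0, -1.0, 1.0, 3.0)

def channel_with_isi(symbols, leak=0.5):
    """Toy channel: each output sample picks up `leak` times the previous symbol."""
    return [s + (leak * symbols[i - 1] if i > 0 else 0.0) for i, s in enumerate(symbols)]

def ffe(samples, taps):
    """FIR feed-forward equalizer: y[n] = sum_k taps[k] * samples[n - k]."""
    out = []
    for n in range(len(samples)):
        acc = 0.0
        for k, c in enumerate(taps):
            if n - k >= 0:
                acc += c * samples[n - k]
        out.append(acc)
    return out

def slice_pam4(samples):
    """Decide each sample as the nearest PAM4 level."""
    return [min(PAM4_LEVELS, key=lambda level: abs(level - v)) for v in samples]

if __name__ == "__main__":
    tx = [3.0, 1.0, -1.0, -3.0, 3.0, -1.0, 1.0, 3.0]
    rx = channel_with_isi(tx)
    # Truncated series for the inverse of (1 + 0.5 z^-1): 1 - 0.5z^-1 + 0.25z^-2 - 0.125z^-3
    taps = [1.0, -0.5, 0.25, -0.125]
    raw_errors = sum(a != b for a, b in zip(slice_pam4(rx), tx))
    eq_errors = sum(a != b for a, b in zip(slice_pam4(ffe(rx, taps)), tx))
    print(f"symbol errors without FFE: {raw_errors}, with FFE: {eq_errors}")
```

Without equalization, the leaked energy pushes several samples closer to the wrong PAM4 level; after the FFE, the residual ISI is small enough that every symbol is decided correctly.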

Integrating such purpose-built modules is a strategic move for anyone looking to optimize their infrastructure for the demands of modern AI and machine learning workloads, where fast, error-free data movement is the lifeblood of the system.
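
On the interoperability point, MSA and related SFF specifications are what let a host recognize a module from its management memory. For example, byte 0 of the module memory map holds an identifier code defined in SFF-8024. The sketch decodes a small subset of those codes from a raw EEPROM dump; how the dump is read depends on your platform and is not shown, and the sample bytes are hypothetical.

```python
# Small subset of SFF-8024 identifier codes (byte 0 of the module memory map).
SFF8024_IDENTIFIERS = {
    0x03: "SFP/SFP+/SFP28",
    0x0D: "QSFP+",
    0x11: "QSFP28",
    0x18: "QSFP-DD",
}

def module_type(eeprom: bytes) -> str:
    """Return the form factor named by the identifier byte, or an 'unknown' label."""
    if not eeprom:
        raise ValueError("empty EEPROM dump")
    code = eeprom[0]
    return SFF8024_IDENTIFIERS.get(code, f"unknown (0x{code:02X})")

if __name__ == "__main__":
    dump = bytes([0x18, 0x00, 0x00])  # hypothetical first bytes of a QSFP-DD dump
    print(module_type(dump))
```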

➤ Best Practices for Optimizing Your Transceiver Performance

Selecting the right transceiver is the first step. Ensuring it performs optimally requires a holistic approach.

✅ Prioritize Quality and Compliance: Always use transceivers from reputable manufacturers that comply with industry standards. This prevents compatibility issues and ensures baseline performance.

✅ Monitor Key Performance Indicators (KPIs): Use your network management system to monitor transceiver metrics like Tx/Rx Power, Bias Current, and Temperature. Sudden changes can indicate impending SI issues.

✅ Choose the Right Fiber and Connectors: The physical layer matters. Use high-quality fiber optic cables with clean connectors to minimize insertion loss and back reflections.

✅ Plan for the Future: When upgrading, consider transceivers that support the next speed tier. A LINK-PP 400G module today provides a solid foundation for future 800G migration, protecting your investment.
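
The KPI monitoring practice above can be sketched as a simple check: convert optical power from milliwatts to dBm, compare a single-lane reading against alarm limits, and flag sudden drift from a baseline. The `DomReading` fields, the threshold values, and the 2 dB drift limit are hypothetical placeholders; real warning and alarm thresholds come from each module's own diagnostic data and datasheet.

```python
import math
from dataclasses import dataclass

def mw_to_dbm(power_mw: float) -> float:
    """Convert optical power from milliwatts to dBm (-inf when there is no light)."""
    if power_mw <= 0:
        return float("-inf")
    return 10 * math.log10(power_mw)

@dataclass
class DomReading:
    rx_power_mw: float
    tx_power_mw: float
    bias_ma: float
    temperature_c: float

# Hypothetical (low, high) limits for illustration only.
THRESHOLDS = {
    "rx_power_dbm": (-10.0, 4.0),
    "tx_power_dbm": (-6.0, 4.0),
    "bias_ma": (20.0, 80.0),
    "temperature_c": (0.0, 70.0),
}

def check_reading(r: DomReading, baseline_rx_dbm: float,
                  max_drift_db: float = 2.0) -> list[str]:
    """Return warnings for out-of-range values or sudden Rx power drift."""
    values = {
        "rx_power_dbm": mw_to_dbm(r.rx_power_mw),
        "tx_power_dbm": mw_to_dbm(r.tx_power_mw),
        "bias_ma": r.bias_ma,
        "temperature_c": r.temperature_c,
    }
    warnings = []
    for name, (low, high) in THRESHOLDS.items():
        if not low <= values[name] <= high:
            warnings.append(f"{name}={values[name]:.2f} outside [{low}, {high}]")
    drift = values["rx_power_dbm"] - baseline_rx_dbm
    if abs(drift) > max_drift_db:
        warnings.append(f"rx_power drifted {drift:+.2f} dB from baseline")
    return warnings
```

The drift check matters as much as the fixed limits: a receive power that is still in range but has dropped several dB since installation often points to a contaminated or damaged connector before errors appear.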
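
For the physical-layer practice above, insertion loss adds up along the path, so a quick budget check before deployment is worthwhile. The sketch sums fiber attenuation and per-connector and per-splice losses and compares the total against a budget. The 0.4 dB/km, 0.5 dB per connector, 0.1 dB per splice, and 4 dB budget are assumptions for illustration; use the figures from your cable plant specifications and the module datasheet.

```python
def link_loss_db(length_km: float, connectors: int, splices: int = 0,
                 fiber_db_per_km: float = 0.4, connector_db: float = 0.5,
                 splice_db: float = 0.1) -> float:
    """Total insertion loss of a passive fiber link (default coefficients are assumptions)."""
    return length_km * fiber_db_per_km + connectors * connector_db + splices * splice_db

def budget_margin_db(loss_db: float, budget_db: float = 4.0) -> float:
    """Remaining margin; a negative value means the link exceeds the budget."""
    return budget_db - loss_db

if __name__ == "__main__":
    loss = link_loss_db(length_km=2.0, connectors=4, splices=2)
    print(f"loss={loss:.2f} dB, margin={budget_margin_db(loss):.2f} dB")
```

Connectors often dominate the total on short data center links, which is why a single dirty or poorly mated connector can push an otherwise marginal link over budget.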

➤ Conclusion: Building a Faster, More Reliable Foundation

In the relentless pursuit of faster data centers, signal integrity and low latency are not mere features—they are the foundation. They directly influence application performance, user satisfaction, and the bottom line.

As technologies like 5G, AI, and the metaverse continue to evolve, the demand for transceivers that can deliver flawless data transmission at lightning speed will only intensify. By investing in high-performance, expertly engineered optical modules like those from LINK-PP, businesses can build a network infrastructure that is not just ready for today's challenges, but also poised to embrace tomorrow's opportunities.

Ready to optimize your data center's performance? The journey begins with a deep understanding of the critical components at its heart.