In the world of high-performance computing (HPC), data centers, and AI, speed is everything. Every microsecond of latency and every CPU cycle counts. But traditional network protocols like TCP/IP create a significant bottleneck, forcing CPUs to spend precious time processing network traffic instead of running applications.

This is where a revolutionary technology comes into play: Remote Direct Memory Access (RDMA). It's the powerhouse behind modern, low-latency, high-throughput networks. Let's dive into what RDMA is, why it matters, and how it's shaping the future of data-intensive technologies.

💡 Key Takeaways

RDMA lets computers exchange data without going through the operating system's network stack or involving the remote CPU. This lowers latency and raises throughput.

Zero-copy networking is an important part of RDMA. It lets data move straight from one computer’s memory to another without intermediate copies into kernel buffers. This saves time and uses very little CPU power.

RDMA works well for high-performance computing, AI, and cloud apps because it can move large amounts of data quickly and efficiently.

You need special hardware, like RDMA network cards, for RDMA to work. Make sure your devices can use RDMA before you start.

Weigh RDMA’s benefits, like better speed and lower resource use, when you decide whether it fits your network.

💡 What Exactly is RDMA? The Core Concept

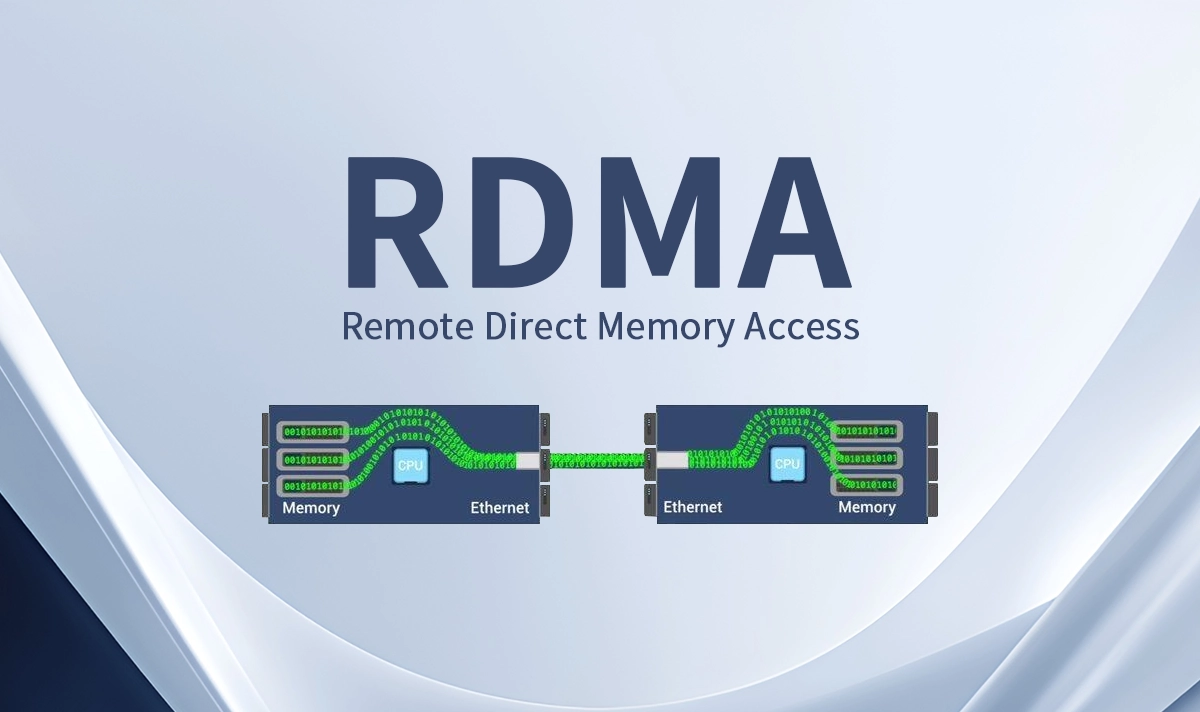

At its heart, RDMA (Remote Direct Memory Access) is a networking technology that allows computers to exchange data directly between their main memories without passing through either operating system's network stack and without involving the remote CPU in the transfer.

Think of it like this:

Traditional Networking: A package (data) must go through the central mail sorting facility (CPU) to be processed, labeled, and then sent out. This takes time and resources.

RDMA Networking: The package is handed directly from one person's hand (memory) to another's across the street, with no middlemen. It's incredibly fast and efficient.

This "kernel bypass", combined with zero-copy transfers directly between application buffers, is the magic that eliminates most of the overhead of traditional networking.

💡 How Does RDMA Work? The Technical Magic

RDMA relies on a specialized Network Interface Card (NIC), usually called an RDMA-enabled NIC (RNIC) or, in InfiniBand terminology, a Host Channel Adapter (HCA). This hardware implements the transport protocol itself and moves data to and from memory independently of the CPU.

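The process involves one computer (the requester) directly reading from or writing to the memory of another computer (the responder). Before that can happen, the two sides set up a connection (a queue pair), and the responder registers the memory region it wants to expose. Registration gives the NIC a remote key that the requester must present with every access. After this setup, the HCAs on each end carry out the transfers, and the responder's CPU is not involved in one-sided reads and writes.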
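The register-then-access sequence can be modeled in a few lines. This is a toy, single-process Python sketch, not real RDMA code: the names `ToyResponder`, `nic_check`, `rdma_write`, and `rdma_read` are hypothetical, remote addresses are simplified to offsets within a region, and `nic_check` stands in for the key, permission, and bounds checks a real NIC performs in hardware. Real applications use a verbs library such as libibverbs, which needs RDMA hardware. What it illustrates is that once a region is registered and its key shared, the requester can read and write it without any responder application code running.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class MemoryRegion:
    """A buffer the responder has registered for remote access (toy model)."""
    buffer: bytearray
    rkey: int
    remote_write: bool
    remote_read: bool


@dataclass
class ToyResponder:
    """Hypothetical stand-in for a host and its RDMA NIC; not the libibverbs API."""
    regions: dict = field(default_factory=dict)  # rkey -> MemoryRegion

    def register(self, size, remote_write=True, remote_read=True):
        # Registration hands the "NIC" a buffer plus a key remote peers must present.
        rkey = secrets.randbits(32)
        while rkey in self.regions:  # avoid (very unlikely) key collisions
            rkey = secrets.randbits(32)
        mr = MemoryRegion(bytearray(size), rkey, remote_write, remote_read)
        self.regions[rkey] = mr
        return mr

    def nic_check(self, rkey, offset, length, write):
        # Models the NIC's per-access validation: known key, permission, and bounds.
        mr = self.regions.get(rkey)
        if mr is None:
            raise PermissionError("unknown rkey")
        if not (mr.remote_write if write else mr.remote_read):
            raise PermissionError("access not permitted on this region")
        if offset < 0 or length < 0 or offset + length > len(mr.buffer):
            raise IndexError("access outside the registered region")
        return mr


def rdma_write(responder, rkey, offset, data):
    """One-sided write: only the modeled NIC check runs on the responder side."""
    mr = responder.nic_check(rkey, offset, len(data), write=True)
    mr.buffer[offset:offset + len(data)] = data


def rdma_read(responder, rkey, offset, length):
    """One-sided read of `length` bytes starting at `offset` in the registered region."""
    mr = responder.nic_check(rkey, offset, length, write=False)
    return bytes(mr.buffer[offset:offset + length])


if __name__ == "__main__":
    responder = ToyResponder()
    mr = responder.register(64)
    # In a real deployment the key and buffer address are exchanged during connection setup.
    rdma_write(responder, mr.rkey, 8, b"hello")
    print(rdma_read(responder, mr.rkey, 8, 5))  # b'hello'
```

Requests with an unknown key, a write to a region registered without remote-write permission, or an access past the end of the region all fail in this model, mirroring why the responder only exposes memory it has explicitly registered.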

💡 Why is RDMA a Game-Changer? Key Benefits & Advantages

Adopting RDMA technology offers profound advantages for performance-hungry environments:

🔥 Extremely Low Latency: By bypassing the kernel and keeping the remote CPU out of the data path, RDMA drastically reduces data transfer delays.

⚡ High Throughput: RDMA enables near-wire-speed data transfer, maximizing the bandwidth of your network infrastructure.

💪 Reduced CPU Overhead: The CPU is freed from network protocol processing, so more of its cycles go to running applications and services.

🚀 Higher Efficiency & Scalability: Systems can handle more data and more connections without being bogged down by network protocol processing.

💡 RDMA Transport Protocols: InfiniBand, RoCE, and iWARP

RDMA isn't just one standard; it can run over different physical networks. Here’s a quick comparison of the three primary transports:

| Protocol | Full Name | Network Medium | Key Characteristics |
|---|---|---|---|
| InfiniBand | N/A | Native InfiniBand fabric | ⭐ Native RDMA. Highest performance, lowest latency. Requires specialized InfiniBand switches. |
| RoCE | RDMA over Converged Ethernet | Ethernet | 🚀 RDMA over Ethernet. Popular choice for modern data centers. Comes in two versions: RoCEv1 (link-layer) and RoCEv2 (routable over IP). |
| iWARP | Internet Wide-Area RDMA Protocol | Ethernet (TCP) | 🌐 RDMA over TCP/IP. Designed to work over standard, lossy networks like WANs. Simpler configuration than RoCE but can have higher latency. |

RoCE (particularly RoCEv2) has become a dominant force in enterprise data centers as it leverages ubiquitous Ethernet infrastructure while delivering near-InfiniBand performance.
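The RoCEv1/RoCEv2 difference comes down to encapsulation. RoCEv2 wraps the InfiniBand transport headers in UDP/IP (destination port 4791), which is what makes it routable, while RoCEv1 places the InfiniBand Global Route Header (GRH) directly after the Ethernet header (Ethertype 0x8915). The rough sketch below estimates per-packet wire efficiency for each. It assumes untagged Ethernet, IPv4, and packets that carry only a Base Transport Header (BTH), such as the middle packets of a multi-packet SEND; operations like the first packet of an RDMA WRITE add further extension headers. The function names are illustrative.

```python
# Header sizes in bytes. Assumptions: no VLAN tag, IPv4 (not IPv6),
# and packets carrying only a BTH (no RETH/AETH/immediate data).
ETH_PREAMBLE_SFD = 8
ETH_HEADER = 14
ETH_FCS = 4
ETH_IFG = 12          # minimum inter-frame gap
IPV4_HEADER = 20
UDP_HEADER = 8
IB_GRH = 40           # InfiniBand Global Route Header (used by RoCEv1)
IB_BTH = 12           # InfiniBand Base Transport Header
IB_ICRC = 4           # invariant CRC carried by RoCE packets
ROCEV2_UDP_PORT = 4791


def wire_bytes(payload, version="v2"):
    """Bytes consumed on the wire by one RoCE packet carrying `payload` bytes."""
    if version == "v2":
        network = IPV4_HEADER + UDP_HEADER  # routable UDP/IP encapsulation
    elif version == "v1":
        network = IB_GRH                    # link-layer only, no IP header
    else:
        raise ValueError("version must be 'v1' or 'v2'")
    return (ETH_PREAMBLE_SFD + ETH_HEADER + network + IB_BTH
            + payload + IB_ICRC + ETH_FCS + ETH_IFG)


def efficiency(payload, version="v2"):
    """Fraction of line rate that carries application payload."""
    return payload / wire_bytes(payload, version)


if __name__ == "__main__":
    for payload in (1024, 4096):
        print(payload, f"v1={efficiency(payload, 'v1'):.3f}", f"v2={efficiency(payload, 'v2'):.3f}")
```

With a 4096-byte payload, RoCEv2 comes out at roughly 98% of line rate under these assumptions; RoCEv1 is slightly lower because its 40-byte GRH is larger than the 28 bytes of IPv4 and UDP headers.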

💡 Where is RDMA Used? Real-World Applications

RDMA isn't just a lab technology; it's critical for today's most demanding workloads:

AI and Machine Learning (ML): Training complex models requires moving massive datasets between servers and GPUs at incredible speeds.

High-Performance Computing (HPC): Scientific simulations and modeling rely on fast message passing between compute nodes (e.g., in MPI applications).

Hyperconverged Infrastructure (HCI) & Storage: Technologies like VMware vSAN and Azure Stack HCI, along with NVMe over Fabrics (NVMe-oF) storage, use RDMA for ultra-fast storage networking and disaggregated storage.

Cloud Data Centers: Major providers like Google, AWS, and Microsoft use RDMA to improve efficiency and performance for their internal infrastructure and cloud services.

💡 The Critical Role of High-Speed Optics in RDMA

An RDMA network is only as fast as its slowest component. High-speed optical transceivers are the unsung heroes that physically enable these lightning-fast connections over fiber optic cables. For an RDMA network to achieve its full potential, especially on 100G, 200G, or 400G links, you need reliable, high-performance optics.

This is where a brand like LINK-PP excels. LINK-PP optical transceivers are engineered for maximum performance and compatibility, ensuring that your RDMA-enabled network operates at peak efficiency without bottlenecks.

For a 400G RoCE deployment, a module like the LINK-PP 400G-ZR4+ coherent pluggable transceiver is an excellent choice for long-haul interconnects, providing the reach and signal integrity necessary for a robust infrastructure.

💡 Conclusion: RDMA is the Future of Data Center Networking

RDMA has evolved from a niche technology to a fundamental pillar of modern data center design. Its ability to provide low-latency networking, high bandwidth, and exceptional CPU efficiency makes it indispensable for AI, HPC, and large-scale storage environments.

To build a true end-to-end high-performance network, pairing RDMA-enabled NICs and switches with top-tier optical components from experts like LINK-PP is not just an option—it's a necessity.

💡 FAQ

What is RDMA used for?

You use RDMA to move data quickly between computers. It helps with cloud computing, AI, and storage networks. You get faster speeds and lower wait times than with older network methods.

What hardware do you need for RDMA?

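You need RDMA-capable network cards (RNICs); regular network cards do not support RDMA in hardware. Switch requirements depend on the transport: InfiniBand needs InfiniBand switches, RoCE runs on Ethernet switches that are usually configured for lossless operation (for example, with Priority Flow Control), and iWARP works with standard Ethernet switches. Make sure your servers and network devices work with RDMA before you upgrade.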
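On Linux, one quick way to check a server is to look under `/sys/class/infiniband`, where the kernel lists RDMA devices of every transport, including RoCE and iWARP adapters. Each port's `link_layer` file reads `InfiniBand` for InfiniBand ports and `Ethernet` for RoCE or iWARP ports. The sketch below assumes a Linux host with the RDMA drivers loaded; the function name `list_rdma_devices` is hypothetical. The `ibv_devices` utility from rdma-core reports similar information from the command line.

```python
from pathlib import Path


def list_rdma_devices(sysfs_root="/sys/class/infiniband"):
    """Return {device: {port: link_layer}} for RDMA devices exposed by the Linux kernel.

    An empty dict means no RDMA devices were found (or this is not a Linux host).
    """
    root = Path(sysfs_root)
    devices = {}
    if not root.is_dir():
        return devices
    for dev in sorted(root.iterdir()):
        ports = {}
        ports_dir = dev / "ports"
        if ports_dir.is_dir():
            for port in ports_dir.iterdir():
                link_file = port / "link_layer"
                # "InfiniBand" for IB ports, "Ethernet" for RoCE/iWARP ports.
                if port.name.isdigit() and link_file.is_file():
                    ports[int(port.name)] = link_file.read_text().strip()
        devices[dev.name] = dict(sorted(ports.items()))
    return devices


if __name__ == "__main__":
    found = list_rdma_devices()
    if not found:
        print("No RDMA devices found under /sys/class/infiniband")
    for name, ports in found.items():
        for port, layer in ports.items():
            print(f"{name} port {port}: {layer}")
```

An empty result does not prove the hardware is unsupported (the driver may simply not be loaded), but a populated result confirms the kernel sees an RDMA-capable device.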

What makes RDMA different from regular networking?

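RDMA lets you send data straight from one computer’s memory to another. The transfer skips the operating system’s network stack and does not need the remote CPU. Regular networking copies data through kernel buffers and uses the CPU on both ends for protocol processing, so it takes more steps and more time.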

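What is the Internet Wide-Area RDMA Protocol (iWARP)?

iWARP runs RDMA over standard TCP/IP, so it works across routed networks, including WAN links between sites. You can connect computers in different locations and still get fast data movement with low CPU overhead, although long distances still add propagation delay.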

What are the main benefits of RDMA?

You get high speed, low latency, and less CPU usage. RDMA helps your systems run more jobs at the same time. Your network works better for big data and real-time tasks.
