Artificial intelligence has shifted rapidly from cloud-only execution to on-device and edge computing. A key technology enabling this shift is the NPU (Neural Processing Unit), a dedicated AI accelerator designed to run neural-network inference efficiently on smartphones, IoT devices, automotive platforms, and industrial systems.

While CPUs and GPUs can run AI workloads, modern systems increasingly include specialized neural engines to achieve lower latency, better energy efficiency, and privacy-preserving on-device compute. This article explains what NPUs are, how they differ from CPUs/GPUs/TPUs, and where they fit in next-generation computing.

1️⃣. What Is an NPU (Neural Processing Unit)?

Purpose-Built AI Compute Engine

An NPU (Neural Processing Unit) is a domain-specific processor optimized for neural-network computations — particularly matrix multiplication, convolution operations, and activation functions. NPUs accelerate inference workloads such as computer vision, audio processing, natural language tasks, and sensor fusion.
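One reason a single matrix engine can cover both matrix multiplication and convolution is that a convolution can be rewritten as a matrix product. The sketch below shows this "im2col" lowering for the simplest case (one channel, stride 1, no padding); the function names are illustrative only and do not come from any NPU SDK, and real compilers may use other lowerings.

```python
# Minimal sketch: lowering a 2-D convolution (single channel, stride 1,
# no padding) to a matrix multiplication via im2col. Names are hypothetical.

def conv2d_direct(image, kernel):
    """Reference 'valid' 2-D cross-correlation (what DL frameworks call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(ow)]
            for i in range(oh)]

def im2col(image, kh, kw):
    """Unroll every kh x kw patch of the image into one row of a matrix."""
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[image[i + a][j + b] for a in range(kh) for b in range(kw)]
            for i in range(oh) for j in range(ow)]

def matmul(a, b):
    """Plain matrix product: the operation tensor compute units are built around."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def conv2d_as_matmul(image, kernel):
    kh, kw = len(kernel), len(kernel[0])
    ow = len(image[0]) - kw + 1
    patches = im2col(image, kh, kw)                 # (oh*ow) x (kh*kw)
    weights = [[w] for row in kernel for w in row]  # (kh*kw) x 1
    flat = [r[0] for r in matmul(patches, weights)]
    # Reshape the oh*ow results back into an oh x ow feature map.
    return [flat[k:k + ow] for k in range(0, len(flat), ow)]

if __name__ == "__main__":
    img = [[1, 2, 3, 0],
           [4, 5, 6, 1],
           [7, 8, 9, 2]]
    k = [[1, 0],
         [0, -1]]
    print(conv2d_direct(img, k))     # [[-4, -4, 2], [-4, -4, 4]]
    print(conv2d_as_matmul(img, k))  # [[-4, -4, 2], [-4, -4, 4]]
```

Both paths produce the same feature map; the matmul path simply trades some duplicated input data for a regular, hardware-friendly operation.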

Core Architectural Traits

Parallel compute units optimized for tensor math

On-chip memory to reduce data-movement overhead

Low-precision arithmetic (INT8 / INT4 / BF16) for higher efficiency

Dedicated pipelines for common neural layers and operators

In essence, an NPU enables real-time, low-power AI processing close to where data is generated.
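To make the low-precision trait concrete, here is a minimal sketch of symmetric per-tensor INT8 quantization: floats are mapped to 8-bit integer codes, the dot product runs entirely in integers with a wide accumulator, and a single float multiply converts the result back. The max-abs/127 scale rule is an assumption chosen for simplicity; real NPU toolchains may use per-channel scales, zero points, or calibration data.

```python
# Minimal sketch: symmetric per-tensor INT8 quantization with a wide integer
# accumulator. The max-abs / 127 scale rule is an illustrative assumption.

def quantize(values, scale):
    """Map floats to int8 codes: q = clamp(round(x / scale), -127, 127)."""
    return [max(-127, min(127, round(v / scale))) for v in values]

def int8_dot(xq, wq):
    """Multiply int8 codes pairwise and sum them in a wider integer.
    Hardware would use an INT32 accumulator; Python ints never overflow."""
    acc = 0
    for a, b in zip(xq, wq):
        acc += a * b
    return acc

def symmetric_scale(values):
    """Scale that maps the largest magnitude to 127 (1.0 if all values are zero)."""
    return (max(abs(v) for v in values) or 1.0) / 127

def quantized_dot(x, w):
    sx, sw = symmetric_scale(x), symmetric_scale(w)
    acc = int8_dot(quantize(x, sx), quantize(w, sw))
    return acc * sx * sw    # dequantize once, after all integer math

if __name__ == "__main__":
    x = [0.5, -1.2, 3.3, 0.0]    # example activations
    w = [0.25, 0.8, -0.1, 1.0]   # example weights
    exact = sum(a * b for a, b in zip(x, w))
    print(f"float: {exact:.4f}  int8: {quantized_dot(x, w):.4f}")
```

For these inputs the INT8 result lands within about 0.01 of the float result, while every multiply-add operates on 8-bit values, which is where the efficiency gain comes from.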

2️⃣. Why NPUs Matter for Modern AI Systems

Key Advantages

| Benefit | Description |
|---|---|
| High energy efficiency | More AI operations per watt than a CPU or GPU on supported workloads |
| Low inference latency | Real-time response for safety-critical AI |
| Privacy & security | Data is processed locally instead of being sent to the cloud |
| Offline intelligence | AI functions keep working without internet access |
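The latency benefit is easiest to see with a little arithmetic. The sketch below computes how far a vehicle moves while waiting for a perception result; the two latency values are hypothetical placeholders, not measurements of any real NPU or network.

```python
# Back-of-the-envelope sketch: distance a vehicle covers while waiting for
# a perception result. Latency figures are hypothetical placeholders.

def distance_travelled_m(speed_kmh, latency_ms):
    """Metres covered at constant speed during the given latency."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000

if __name__ == "__main__":
    speed = 100  # km/h
    for label, latency in [("on-device inference (assumed)", 20),
                           ("cloud round trip (assumed)", 150)]:
        print(f"{label}: {distance_travelled_m(speed, latency):.2f} m")
    # on-device inference (assumed): 0.56 m
    # cloud round trip (assumed): 4.17 m
```

At 100 km/h every 100 ms of extra delay costs roughly 2.8 m of travel, which is why safety-critical perception favors local inference.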

Typical NPU Capabilities

Image segmentation & object detection

Speech recognition & on-device translation

Sensor analytics for robotics & wearables

Driver-assist perception pipelines in vehicles

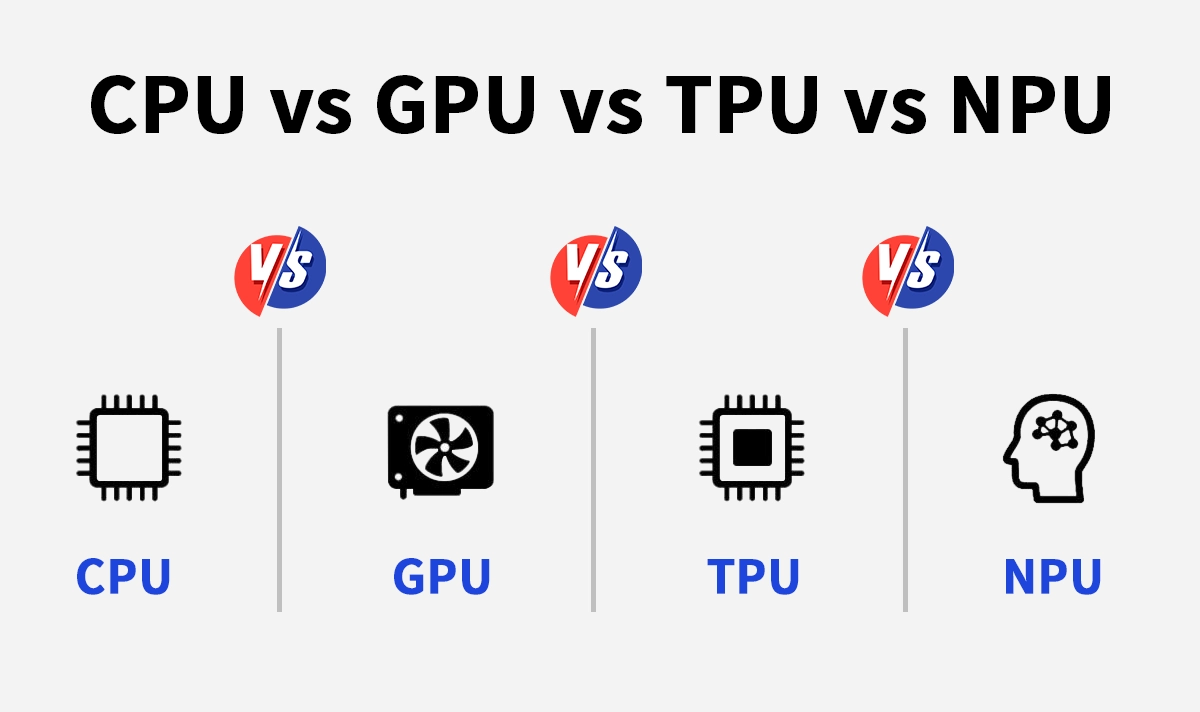

3️⃣. NPU vs CPU vs GPU vs TPU

| Component | Purpose | Strength | Typical Location |
|---|---|---|---|
| CPU | General compute | Control logic & OS tasks | Virtually every device |
| GPU | Parallel compute | Training & graphics | Cloud, PC, edge |
| NPU | Neural inference | Low-latency, efficient AI | Mobile, IoT, edge devices |
| TPU | Tensor compute | Large-scale training/inference | Cloud (Google) |

Key difference:

GPU = high-flexibility, high-throughput compute

NPU = specialized, high-efficiency neural compute with a narrower set of supported operators

4️⃣. How Does an NPU Work?

Key Components

Tensor compute units

On-chip SRAM / unified memory

DMA and data-reuse pipelines

Quantization and activation engines

Neural control logic
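The on-chip SRAM and DMA/data-reuse components exist to cut off-chip memory traffic. The sketch below contrasts a naive matrix multiply with a tiled one and counts operand fetches from simulated off-chip memory. The cost model (one fetch per operand read in the naive loop, one fetch per element copied into a tile buffer) is a simplifying assumption and does not model any specific NPU.

```python
# Minimal sketch: tiling a matrix multiply so each fetched tile is reused.
# The fetch-counting cost model is a simplifying assumption.

def naive_matmul(A, B):
    """Every multiply-add re-reads both operands from off-chip memory."""
    n = len(A)
    C = [[0] * n for _ in range(n)]
    fetches = 0
    for i in range(n):
        for j in range(n):
            for k in range(n):
                C[i][j] += A[i][k] * B[k][j]
                fetches += 2
    return C, fetches

def tiled_matmul(A, B, tile):
    """Copy tile x tile blocks into a local buffer once, then reuse them."""
    n = len(A)
    assert n % tile == 0, "sketch assumes n is a multiple of the tile size"
    C = [[0] * n for _ in range(n)]
    fetches = 0
    for i0 in range(0, n, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, n, tile):
                # Simulated DMA: bring one tile of A and one tile of B on-chip.
                a_tile = [row[k0:k0 + tile] for row in A[i0:i0 + tile]]
                b_tile = [row[j0:j0 + tile] for row in B[k0:k0 + tile]]
                fetches += 2 * tile * tile
                # All arithmetic below touches only the local tiles.
                for i in range(tile):
                    for j in range(tile):
                        C[i0 + i][j0 + j] += sum(a_tile[i][k] * b_tile[k][j]
                                                 for k in range(tile))
    return C, fetches

if __name__ == "__main__":
    n = 8
    A = [[(3 * i + j) % 7 for j in range(n)] for i in range(n)]
    B = [[(i + 2 * j) % 5 for j in range(n)] for i in range(n)]
    C1, f1 = naive_matmul(A, B)
    C2, f2 = tiled_matmul(A, B, tile=4)
    print("same result:", C1 == C2, "| naive fetches:", f1, "| tiled fetches:", f2)
```

For an n x n product the naive loop fetches 2n³ operands while the tiled version fetches 2n³/T, so with n = 8 and T = 4 the count drops from 1024 to 256 with identical results. Larger on-chip buffers allow larger tiles and proportionally less traffic.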

Supported AI Workloads

Image recognition & object detection

Natural language processing

Voice and speech recognition

Sensor fusion for robotics and vehicles

Generative AI and local vision processing

Many NPUs also support INT8, FP16, and mixed-precision arithmetic for higher throughput.
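Mixed precision typically means low-precision inputs combined with a wider accumulator. This sketch, which assumes round-to-nearest after every addition, emulates FP16 and FP32 accumulators with Python's `struct` half-precision (`e`) and single-precision (`f`) formats and sums 10,000 FP16 inputs.

```python
import struct

def round_fp16(x):
    """Round to the nearest IEEE 754 binary16 value (struct 'e' format)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def round_fp32(x):
    """Round to the nearest IEEE 754 binary32 value (struct 'f' format)."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def accumulate(values, rounder):
    """Sum values, rounding the running total to the accumulator's precision."""
    acc = 0.0
    for v in values:
        acc = rounder(acc + v)
    return acc

if __name__ == "__main__":
    # 10,000 FP16 inputs, each the FP16 value nearest to 0.1.
    inputs = [round_fp16(0.1)] * 10_000
    print("FP16 accumulator:", accumulate(inputs, round_fp16))
    print("FP32 accumulator:", accumulate(inputs, round_fp32))
    print("float64 reference:", sum(inputs))
```

The FP16 accumulator stops growing once each 0.1 increment is smaller than half the spacing between neighboring FP16 values, ending far below the true total. The FP32 accumulator stays close to the reference. This is why hardware often keeps FP16 or INT8 operands but accumulates in a wider format.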

5️⃣. Common Devices Using NPUs

| Segment | Examples |
|---|---|
| Smartphones | Apple Neural Engine, Qualcomm Hexagon NPU (formerly Hexagon DSP), Huawei Kirin NPU |
| Edge AI Gateways | NVIDIA Jetson, Intel Movidius VPU |
| Industrial Systems | Smart PLCs, industrial cameras |
| Automotive | ADAS, autonomous driving SoCs |
| Consumer | Smart speakers, AR/VR glasses, robots |

6️⃣. NPU & Edge Networking — Why Connectivity Matters

Edge AI systems often integrate network interfaces to stream data, update models, or communicate decisions.

Reliable wired networking is widely used in:

Industrial automation

AI vision systems (PoE cameras)

Smart access points & IoT hubs

Edge servers and gateways

RJ45 MagJacks for AI Edge Devices

For AI gateways and embedded computing modules, RJ45 MagJacks (RJ45 jacks with integrated magnetics) provide:

Stable Ethernet connectivity

PoE/PoE+ power for cameras and sensors

EMI shielding and signal integrity

Compact modular design
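When a camera with an on-board NPU is powered over the Ethernet cable, its total draw must fit the PoE class. The sketch below checks a load against the power available at the powered device under IEEE 802.3af (12.95 W) and 802.3at (25.5 W). The per-component wattages and the 10% margin are hypothetical values for illustration only.

```python
# Minimal sketch of a PoE power-budget check for an edge AI camera.
# PD-side limits follow IEEE 802.3af / 802.3at; component figures and the
# safety margin are hypothetical.

PD_BUDGET_W = {
    "PoE (802.3af)": 12.95,   # power available at the powered device
    "PoE+ (802.3at)": 25.5,
}

EXAMPLE_CAMERA_W = {          # hypothetical per-component draw, in watts
    "image sensor + ISP": 2.0,
    "SoC CPU + NPU under load": 6.5,
    "IR illuminator": 4.0,
    "Ethernet PHY + misc": 1.0,
}

def total_load_w(components):
    return sum(components.values())

def fits(components, standard, margin=0.1):
    """True if the load plus a safety margin fits the PD-side budget."""
    return total_load_w(components) * (1 + margin) <= PD_BUDGET_W[standard]

if __name__ == "__main__":
    for std in PD_BUDGET_W:
        load = total_load_w(EXAMPLE_CAMERA_W)
        print(f"{std}: load {load:.1f} W, fits={fits(EXAMPLE_CAMERA_W, std)}")
    # PoE (802.3af): load 13.5 W, fits=False
    # PoE+ (802.3at): load 13.5 W, fits=True
```

Under these assumptions the NPU's inference load is what pushes the design from plain PoE to PoE+.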

Example features:

10/100/1000 Mbps Ethernet support

PoE options for smart edge devices

Designed for embedded and networking systems
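Local inference also changes what has to cross the network. This back-of-the-envelope sketch compares uncompressed 1080p video with a stream of detection records over a 1000 Mbps link. Resolution, frame rate, and the 50-boxes-at-24-bytes record size are assumptions, and real cameras compress video, so the raw figure overstates the streaming cost. The point is the order-of-magnitude gap.

```python
# Back-of-the-envelope sketch: raw video vs. detection results over Ethernet.
# Resolution, frame rate and detection-record size are assumptions.

def raw_video_bps(width, height, bytes_per_pixel, fps):
    """Uncompressed video bit rate in bits per second."""
    return width * height * bytes_per_pixel * fps * 8

def detections_bps(detections_per_frame, bytes_per_detection, fps):
    """Bit rate when only detection records (e.g. class, score, box) are sent."""
    return detections_per_frame * bytes_per_detection * fps * 8

if __name__ == "__main__":
    link_bps = 1_000_000_000                  # 1000 Mbps Ethernet
    raw = raw_video_bps(1920, 1080, 3, 30)    # 1080p RGB at 30 fps
    meta = detections_bps(50, 24, 30)         # hypothetical 50 boxes x 24 B
    print(f"raw video: {raw / 1e6:.0f} Mbps ({raw / link_bps:.0%} of link)")
    print(f"detections only: {meta / 1e6:.3f} Mbps")
    # raw video: 1493 Mbps (149% of link)
    # detections only: 0.288 Mbps
```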

7️⃣. Conclusion

NPUs are redefining computing architecture by enabling fast, power-efficient AI inference at the edge. As more systems run neural workloads locally, NPUs will sit alongside CPUs and GPUs as a core component in modern processing pipelines.

From smartphones to smart factories, the Neural Processing Unit is enabling a new era of real-time, secure, low-latency AI deployment.